Wrapping insecure apps in Cloudflare Workers

Sometimes you get one of those problems handed to you with a priority of “this has to happen yesterday”. A client uses an application that generates confidential content and provides a world-readable URL to that processed data. This permits anyone with the URL to access all of that content, with no username or password required. This doesn't seem insecure at first glance, as a third party would have to guess a 48-character document ID or otherwise glean it from the user's browser. That holds only until the user pastes the link into an email or Slack... or Twitter.

The remit was:

- Ensure that sharing any content link would not disclose content, including content loaded from rendered CSS (such as included image files).
- No changes to frontend or backend code, as this was a third-party integration.
- The content loads (via a series of REST requests) into an IFRAME, which introduces issues with cross-origin permissions. Half the content is fetched by a large, complex JavaScript application, and the rest is included from style sheets. This makes using an interceptor and a bearer token hard.
- It had to be done yesterday (my favourite type of deadline, honestly).

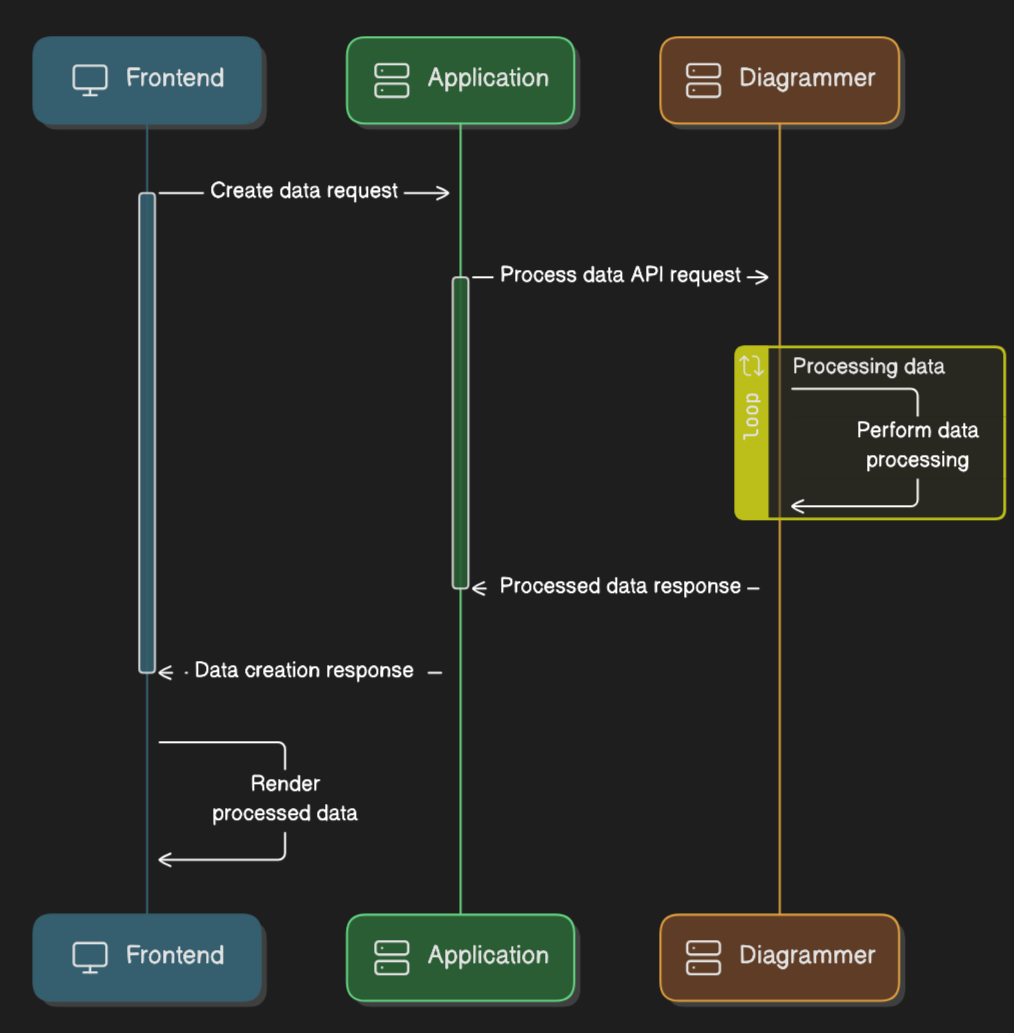

Our application, let’s call it Diagrammer, provides several RESTful API endpoints for generating content in the following process flow, all of it without authorisation or access controls.

- The frontend requests the processing of specific files by sending a request to the application backend.
- The backend application validates that the user has access to the requested documents and then has Diagrammer process them.
- Diagrammer generates the requested content and provides the application backend with a URL for that content, which it passes back to the frontend. The frontend creates an IFRAME and populates it with the URL. This is a one-time token, and any further request to generate the same content will get a different token.

Cloudflare Workers to the rescue

By placing Diagrammer on the Cloudflare network, I was able to intercept, transform, and control the flow of all its traffic using the Workers platform. This gave me enough to do what was needed until the customer’s Engineering team has time to implement controls via other means.

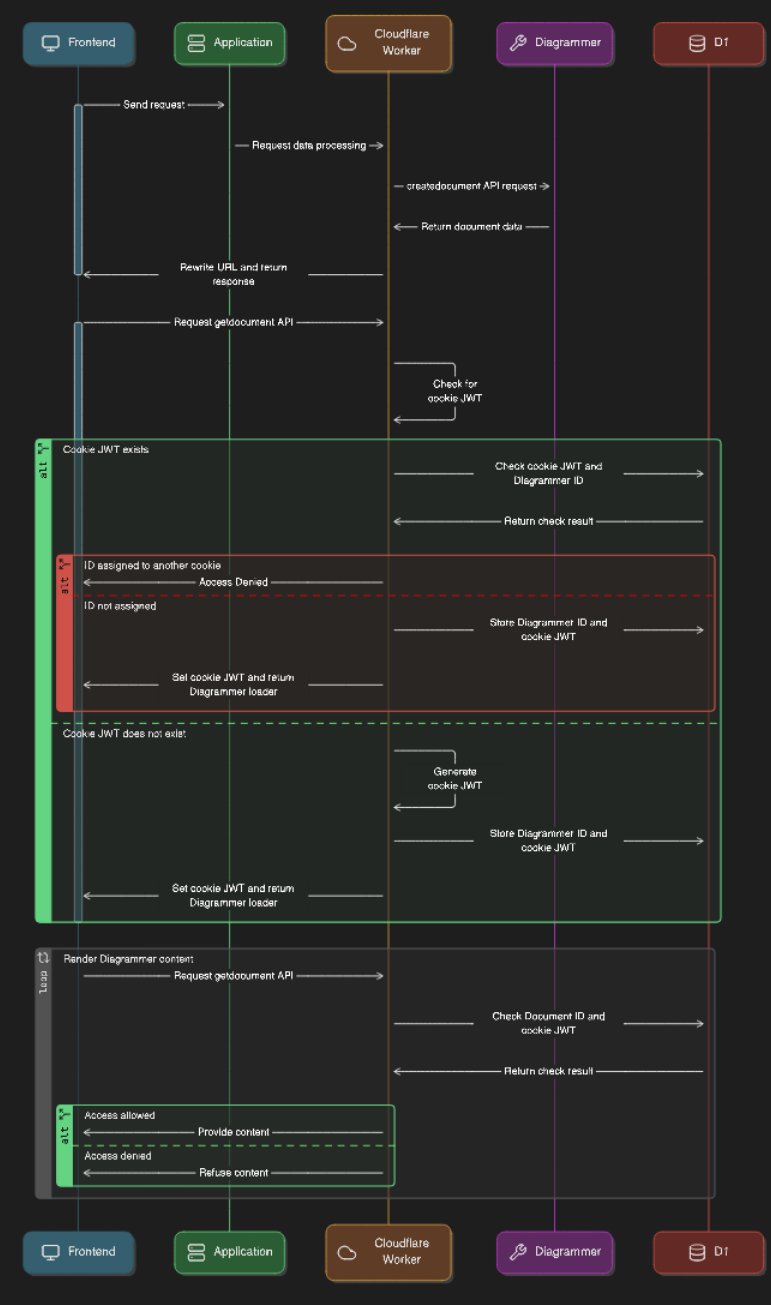

The new flow would work as follows:

- Intercept the request from the application backend to the Diagrammer server, do what is needed, and then rewrite the response so that it points to the host name of the Cloudflare Worker.
- With all requests going through the Cloudflare Worker, we can now process and validate them.

The Magic

After a couple of hours of thinking and testing possibilities in a sandbox, I decided on the following approach, which forces all traffic through Workers to validate a cookie. I settled on this after discovering that content would still leak when using a hook on fetch to add security headers.

- Check if the frontend has a JWT cookie, one that is limited in scope to the origin of the IFRAME. If not, generate a token.
- Check if the document ID (remember, a 48-character token) has already been issued to a token. This is stored in Cloudflare D1 as it's simpler than an implementation in Durable Objects.
- If this document ID has been issued, consider it consumed and return Access Denied.
- Record the document ID to token relationship in D1.
- Get the content from the Diagrammer server and add a `set-cookie` header to the response to the frontend, which renders in the IFRAME.
- Every subsequent request for resources then passes through this Worker, which verifies access against the document ID/token pairing in D1.
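The claim-once rule in the steps above can be sketched without any Cloudflare dependencies. This is only an illustration: the `Map` is a hypothetical stand-in for the D1 table, and the function names `claimDocument` and `canReadResources` are mine, not part of the real Worker.

```javascript
// Hypothetical in-memory stand-in for the D1 table: document ID -> token.
const issued = new Map();

// Loader page: the first caller claims the document ID. Every later
// caller is refused, even if it presents the same token again.
function claimDocument(documentId, token) {
  if (issued.has(documentId)) {
    return false; // already consumed
  }
  issued.set(documentId, token);
  return true;
}

// Resource requests: only the token that claimed the document ID
// may fetch the resources that belong to it.
function canReadResources(documentId, token) {
  return issued.get(documentId) === token;
}

const docId = "a".repeat(48);
console.log(claimDocument(docId, "token-1"));    // true
console.log(claimDocument(docId, "token-2"));    // false
console.log(canReadResources(docId, "token-1")); // true
console.log(canReadResources(docId, "token-2")); // false
```

The important property is that a leaked URL is useless once the legitimate client has loaded it, because the second visitor can neither claim the ID nor present the paired token.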

The code

The code is basic (and not complete here) and flows through three main routes.

- The `/api/createdocument` endpoint, which returns a URL where the processed result can be found.
- The `/api/showdocument/{id}` page, which is returned from the above request and is an application loader.
- The `/api/showdocument/{id}/resources` endpoint, which is all the content that the loader page pulls in, either directly (JavaScript, HTML, CSS) or as images and fonts (included in CSS).

The code behind the routes is simple regex testing on the pathname, with each route having a setting that forces the router to test for the cookie.

    let url = new URL(request.url);
    url.pathname = url.pathname.replaceAll("//", "/");
    let route;
    for (let key in routes[request.method]) {
        let regex = new RegExp(key);
        if (regex.test(url.pathname)) {
            route = routes[request.method][key];
            break;
        }
    }
    if (route) {
        if (route.requireSessionValidation && !await isSessionValid(url, request, env, route)) {
            return new Response("Access Denied", { status: 403 });
        }
        return await route.handler(url, request, env);
    }
    return new Response("404", { status: 404 });
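The router expects a `routes` object keyed first by HTTP method and then by regex source. The full table isn't shown in this post, so the following is a minimal sketch of what it might look like. The HTTP methods, the `name` fields, and the `matchRoute` helper are assumptions for illustration only.

```javascript
// Hypothetical route table: HTTP method -> regex source -> route definition.
const routes = {
  GET: {
    "^/api/showdocument/[a-f0-9]{48}$": { name: "loader" },
    "^/api/showdocument/[a-f0-9]{48}/(.*)": {
      name: "resources",
      requireSessionValidation: true,
    },
  },
  POST: {
    "^/api/createdocument$": { name: "create" },
  },
};

// First matching pattern wins, so the order of the keys matters.
function matchRoute(method, pathname) {
  const cleaned = pathname.replaceAll("//", "/");
  for (const [pattern, route] of Object.entries(routes[method] ?? {})) {
    if (new RegExp(pattern).test(cleaned)) {
      return route;
    }
  }
  return undefined;
}

const id = "0123456789abcdef".repeat(3); // 48 hex characters
console.log(matchRoute("GET", `/api/showdocument/${id}`).name);         // loader
console.log(matchRoute("GET", `/api/showdocument/${id}/app.css`).name); // resources
console.log(matchRoute("GET", "/something/else"));                      // undefined
```

Because the loader pattern is anchored with `$`, a resource path never matches it by accident, and anything that matches neither pattern falls through to the 404.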

If the route exists and the `requireSessionValidation` flag is set, then it tests whether the session cookie is valid:

    async function isSessionValid(url, request, env, route) {
        // get the 48 character ID from the URL
        const documentId = documentIdFromUrl(url);
        if (!documentId && route.validationRequiresDocumentId) {
            return false;
        }
        const userGuid = getCookie(request.headers.get("Cookie"), env.COOKIE_NAME);
        if (!isCookieJwtValid(userGuid)) {
            return false;
        }
        // all() returns the matching rows in `results`
        const dbResult = await env.DB.prepare("select count(1) as rowCount FROM document_sessions_v1 WHERE document_id = ? and user_guid = ?")
            .bind(await sha256(documentId), await sha256(userGuid))
            .all();
        return (dbResult.results[0].rowCount > 0);
    }

^/api/createdocument$

This is a simple proxy with a header rewrite, as the location passed to the application loader is returned in the `Location` header.

    "^/api/createdocument$": {
        handler: async function (url, request, env) {
            const proxyUrl = new URL(url);
            proxyUrl.hostname = env.SERVER_BACKEND;
            const newRequest = new Request(proxyUrl.toString(), request);
            const originalResponse = await fetch(newRequest);
            // if a Location header exists, rewrite it
            if (originalResponse.headers.has("Location")) {
                // resolve against the backend URL in case the header is relative
                const redirectUrl = new URL(originalResponse.headers.get("Location"), proxyUrl);
                // update the location so that its hostname is the Cloudflare Worker
                redirectUrl.hostname = url.hostname;
                // copy the headers, as those on a fetched response are immutable
                const headers = new Headers(originalResponse.headers);
                headers.set("Location", redirectUrl.toString());
                return new Response(null, { status: originalResponse.status, headers });
            }
            return new Response("Bad Request", { status: 400 });
        }
    }
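The only transformation in this route is swapping the hostname in the `Location` header, which can be shown in isolation. The helper name `rewriteLocation` and the example hostnames are hypothetical.

```javascript
// Point a Location header at the Worker instead of the backend.
// A relative header is resolved against the backend URL first.
function rewriteLocation(locationHeader, backendUrl, workerHostname) {
  const redirectUrl = new URL(locationHeader, backendUrl);
  redirectUrl.hostname = workerHostname;
  return redirectUrl.toString();
}

const backend = "https://diagrammer.internal.example/api/createdocument";

console.log(rewriteLocation(
  "https://diagrammer.internal.example/api/showdocument/abc",
  backend,
  "docs.example.com"
)); // https://docs.example.com/api/showdocument/abc

console.log(rewriteLocation("/api/showdocument/abc", backend, "docs.example.com"));
// https://docs.example.com/api/showdocument/abc
```

Setting `hostname` leaves the scheme, port, path and query string untouched, so a backend on a non-standard port would need the port cleared as well.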

^/api/showdocument/[a-f0-9]{48}$

The majority of the processing is performed in this route. This code is pseudo-code for the most part. This route returns the application loader with relative path names, so no content is rewritten.

    "^/api/showdocument/[a-f0-9]{48}$": {
        handler: async function (url, request, env) {
            const documentId = documentIdFromUrl(url);
            if (!documentId) {
                return new Response("Access Denied", { status: 403 });
            }
            // do we have a cookie GUID (from a previous session)?
            let userGuid = getCookie(request.headers.get("Cookie"), env.COOKIE_NAME);
            let setCookieFlag = false;
            if (!isCookieJwtValid(userGuid)) {
                userGuid = await generateSignedJwtToken();
                // flag the cookie to be set in the header, but ONLY if the loader returns a 200
                setCookieFlag = true;
            }
            // never store the actual document id
            const documentIdSha = await sha256(documentId);
            // has anyone (even us) consumed this document ID? this mitigates replay following header theft
            const dbResult = await env.DB.prepare("select count(1) as document_count FROM document_sessions_v1 WHERE document_id = ?")
                .bind(documentIdSha)
                .all();
            // someone has consumed this one-time document id so deny
            if (dbResult.results[0].document_count > 0) {
                return new Response("Access Denied", { status: 403 });
            }
            // if we get here then we can insert the session into the DB
            await env.DB.prepare("INSERT INTO document_sessions_v1 (document_id, user_guid) VALUES (?, ?)")
                .bind(documentIdSha, await sha256(userGuid))
                .run();
            // fetch from the Diagrammer application backend
            const proxyUrl = new URL(url);
            proxyUrl.hostname = env.SERVER_BACKEND;
            const newRequest = new Request(proxyUrl.toString(), request);
            const response = await fetch(newRequest);
            // do NOT set a cookie if we don't have a valid Diagrammer loader
            const newResponseHeaders = new Headers(response.headers);
            if (response.status == 200) {
                const originalBody = await response.text();
                if (setCookieFlag) {
                    // this cookie's SameSite MUST be None or the iframe refuses to set it.
                    // Partitioned (supported in Chrome and in nightly Firefox - not Safari with tracking protection on)
                    const tokenCookie = `${env.COOKIE_NAME}=${userGuid}; path=/; secure; HttpOnly; SameSite=None; Partitioned; __Host-iapp=${env.NAMESPACE_ENV}`;
                    newResponseHeaders.set("Set-Cookie", tokenCookie);
                }
                // status and headers go in one init object; a third constructor argument is ignored
                return new Response(originalBody, {
                    status: response.status,
                    statusText: response.statusText,
                    headers: newResponseHeaders
                });
            }
            return new Response("Bad Request", { status: 400 });
        }
    }

^/api/showdocument/[a-f0-9]{48}/(.*)

This endpoint has the cookie and the document ID verified before running as a proxy to the Diagrammer server. It rewrites the URLs in HTML and CSS so that they point to the Cloudflare Worker.

    "^/api/showdocument/[a-f0-9]{48}/(.*)": {
        // this endpoint serves resources that MUST be session validated
        // and before the handler is called, the validationRequiresDocumentId validates an id
        requireSessionValidation: true,
        validationRequiresDocumentId: true,
        handler: async function (url, request, env) {
            const proxyUrl = new URL(url);
            const originalHostname = proxyUrl.hostname;
            proxyUrl.hostname = env.SERVER_BACKEND;
            const newRequest = new Request(proxyUrl.toString(), request);
            // get the content from Diagrammer
            const response = await fetch(newRequest);
            // the HTML and CSS need the URLs replaced (the header may be missing)
            const contentType = response.headers.get("Content-Type") ?? "";
            if (contentType.includes("text/css") || contentType.includes("text/html")) {
                const body = await response.text();
                return new Response(body.replaceAll(env.SERVER_BACKEND, originalHostname), response);
            }
            // everything else is passed through untouched
            return response;
        }
    }
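To make the rewrite step concrete, here is a small sketch of the content-type check and the hostname replacement applied to a CSS body. The function names and hostnames are made up for the example.

```javascript
// Only text bodies that can embed URLs are rewritten.
function needsRewrite(contentType) {
  const type = contentType ?? "";
  return type.includes("text/css") || type.includes("text/html");
}

// Replace every occurrence of the backend hostname with the Worker's.
function rewriteBackendHost(body, backendHost, workerHost) {
  return body.replaceAll(backendHost, workerHost);
}

const css = '.logo { background: url("https://diagrammer.internal.example/api/showdocument/abc/logo.png"); }';

console.log(needsRewrite("text/css; charset=utf-8")); // true
console.log(needsRewrite("image/png"));               // false
console.log(rewriteBackendHost(css, "diagrammer.internal.example", "docs.example.com"));
// .logo { background: url("https://docs.example.com/api/showdocument/abc/logo.png"); }
```

A plain string replace is blunt but adequate here, since the backend hostname is unlikely to appear in the content for any reason other than a URL.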

Here, resources means any resource: `application.js`, `application.css`, `resource/images_001.png` and so on, whatever the Diagrammer application generates and embeds into HTML or CSS. Each resource request then has its cookie tested.

Our application will now only serve requests to the first client that requests this content. Any further requests will result in access being denied. It's not a foolproof method of preventing information disclosure but it was enough to pass testing as covered by the agreed statement of works.

The Costs

We have a Workers Paid subscription with Cloudflare, which allows for 25 billion rows read and 50 million rows written per month before a cost is incurred.

Diagrammer is accessed about 20 times an hour on average, with around 10 HTTP requests for content per diagram render. The costs of D1 and Workers fall well within the included allowance.
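As a rough back-of-the-envelope check of those numbers, assuming a 30-day month and one D1 row written per render (the insert in the loader route):

```javascript
const rendersPerHour = 20;
const requestsPerRender = 10;
const hoursPerMonth = 24 * 30; // assumes a 30-day month

const rendersPerMonth = rendersPerHour * hoursPerMonth;       // 14,400
const requestsPerMonth = rendersPerMonth * requestsPerRender; // 144,000

// Allowance figure quoted above.
const rowWritesIncluded = 50_000_000;

// Assumption: one row written per render.
const writeShare = rendersPerMonth / rowWritesIncluded;

console.log(rendersPerMonth);  // 14400
console.log(requestsPerMonth); // 144000
console.log(`${(writeShare * 100).toFixed(4)}% of the row-write allowance`);
```

That works out to roughly 144,000 Worker requests and 14,400 row writes a month, which is about 0.03% of the write allowance.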

The Workers code itself is extremely lightweight, with most of the time taken in the async fetch, which you don't pay for while it's in an awaiting state. The highest CPU time is spent in the SHA calculations, which are provided by the extremely fast embedded crypto libraries. With the number of requests required here, we fall well within the allowance provided before incurring more cost.

To give you an idea of Workers pricing, the API for StopForumSpam clocks up 400 to 500 requests per second for a cost of $400 to $500 a month.

The overall price for Diagrammer will be no more than the $5 subscription price per month.

What could be done (a lot) better

- The application backend could proxy all requests to Diagrammer, but this wasn’t really an option given the timescales of their SDLC. The frontend could also still leak files included in CSS, as it has no mechanism (without using a cookie) for checking these files.
- The Worker could generate a signed token which the frontend feeds into a modified/intermediate IFRAME loader via the `postMessage` API. This removes any issue with someone intercepting (or guessing) the URL in the seconds between it being generated and then consumed by the frontend. The `postMessage` tokens would then use the Storage API, as Safari is seriously lacking in its support for cookie partitioning.
- Inject some scripts into the HTML so that access errors display a friendly error message.
- Use a global variable LRU/array for storing paired tokens so that requests routed to the same isolate don't have to query D1 again.
- My code above is just awful, as it was rushed before a pentesting deadline, but it does show how quickly something can be spun up.
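The per-isolate cache idea from the list above could look something like the following. It is a sketch only: `createPairCache` and its method names are hypothetical, and it caches only positive results, so a miss would still fall through to D1.

```javascript
// A small LRU built on Map, which iterates in insertion order.
// Declared at module level, it would persist between requests
// that happen to be served by the same isolate.
function createPairCache(maxEntries) {
  const entries = new Map();
  const keyFor = (documentIdSha, userGuidSha) => `${documentIdSha}:${userGuidSha}`;

  return {
    // Record a pairing that D1 has already confirmed.
    remember(documentIdSha, userGuidSha) {
      const key = keyFor(documentIdSha, userGuidSha);
      entries.delete(key); // re-insert so the key becomes the newest
      entries.set(key, true);
      if (entries.size > maxEntries) {
        // the first key in iteration order is the least recently used
        entries.delete(entries.keys().next().value);
      }
    },
    // True if the pairing is cached; a hit refreshes its recency.
    has(documentIdSha, userGuidSha) {
      const key = keyFor(documentIdSha, userGuidSha);
      if (!entries.has(key)) return false;
      entries.delete(key);
      entries.set(key, true);
      return true;
    },
    get size() {
      return entries.size;
    },
  };
}

const cache = createPairCache(2);
cache.remember("doc-a", "user-1");
cache.remember("doc-b", "user-1");
cache.has("doc-a", "user-1");      // touch doc-a so doc-b becomes the oldest
cache.remember("doc-c", "user-1"); // evicts doc-b
console.log(cache.has("doc-a", "user-1")); // true
console.log(cache.has("doc-b", "user-1")); // false
```

Isolates can be evicted at any time, so a cache like this can only ever be an optimisation in front of D1 and never the source of truth.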